https://medium.com/@rebane/could-ai-have-saved-the-cyclist-had-i-programmed-the-uber-car-6e899067fefe

Recent incident where Uber car was involved in an accident where a cyclist got killed has sparked many comments and opinions. Many blame Uber

for incompetent system, others think the accident was trivial to

prevent. I do agree that there is a technology to avoid such accidents.

But I would also like to point out why the problem is not as easy for an

autonomous system to solve as it is for regular city car. So, lets

answer “Mercedes has a night vision system, why Uber did not use one?”

Before I continue I will emphasize that I do not know what was the cause of accident nor do I claim that it was inevitable. In addition I do not want to blame or justify anyone. That said, I will discuss why this problem is much harder for an AI-based system than it is for collision avoidance system in your Seat Ibiza.

Regular collision avoidance (CA) system that can be found in almost any new vehicle is a deterministic and single purpose system.

“Single purpose” means that the system has only one goal — to brake

when the vehicle is about to collide; “deterministic” means that it is

programmed to take certain action (i.e., hit brakes) when certain kind

of signals are detected. It will always produce the same kind of

reaction for the same kind of signal. There are also CA systems that

have some probabilistic behaviour depending on the environment, but in a

large scale, all CA systems are rather straightforward: when the

vehicle approaches something in front of it with unreasonable speed, it

hits the brakes! You can create this system using simple IF-statements

in a program code.

Why is AI-based system different? Artificial intelligence is a capability of a system to demonstrate cognitive skills such as learning and problem solving (see Wikipedia) — AI is not preprogrammed to monitor a known input from a sensor to take a predefined action.

So instead of defining what to do in known circumstances we train AI by

giving the algorithm a lot of data and ask it to learn what to do. This

is machine learning.

If we build a collision avoidance system using machine learning, we’ll

probably achieve near perfect performance — but the system would be

still single purpose system. It would be able to brake but not to

navigate.

Navigation

is composed of sensing and interpreting the environment, making

decisions, and taking action. Environment perception includes path

planning (where to drive?), obstacle detection, and trajectory

estimation (how are detected objects moving?). This is not an exhaustive

list. Now we see that collision avoidance is only one task of the many

and the system has many questions at the same time: where am I going,

what do I see, how to interpret this, are any objects moving, how fast

are they moving, will my trajectory cross with someone else's etc?

Such problem of autonomous navigation is too complex to be solved by using only simple IF-ELSE statements

in program code and map senor signal readings to actions. Why? To

measure everything that is needed for such a task, a vehicle must have

tens of different sensors. The purpose is to have a comprehensive field

of view and to compensate the weaknesses that individual sensors have

(more on that soon). If we now calculate how many different combinations

of measurements these sensors are producing, we’ll clearly see that we

need self-learning system. It is far too complex for a human to model every possible input combination.

Further, this self-learning system will most likely base it’s decisions on probabilities.

If it notices something on the road, it will consider all possible

options and attaches probabilities to each. For example, 5% probability

that the object is a dog and 95.7% probability that it is a lorry will

result in a decision that the object is a lorry. But what if sensor input is contradictory?

This

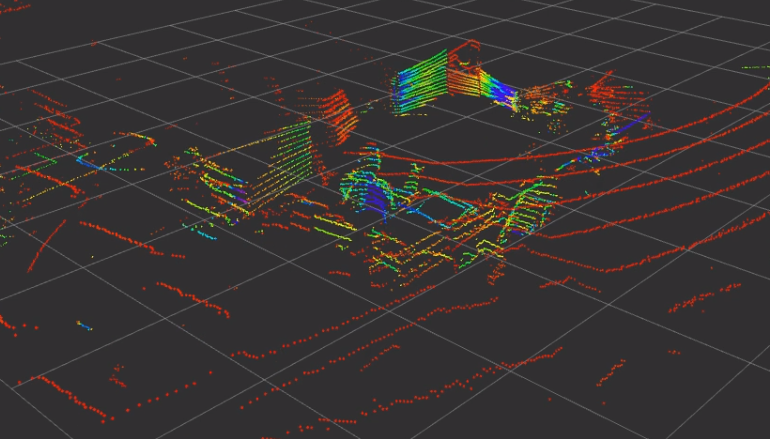

can easily happen. For example, a normal video camera can see a

reasonably close object in a great detail, but only in two dimensions. A

LIDAR, a kind of laser, will see the same object in three dimensions,

but in less detail and without color information (see the image below).

We can then use several video cameras to reconstruct 3D scene from the

images and compare it with LIDAR “image”. A combined result should be

more trustworthy. But video cameras are sensitive to light

conditions — even a shadow might interfere with some segments of the

scene and produce low quality output. This is where a good system

recognises the difficulty and says that in this situation it will trust

LIDAR output more that video camera output. For some other part of the

scene, the situation might be vice versa. The areas where both sensors agree are where the measurement has the highest quality.

What

if camera thinks it is a lorry and LIDAR assesses that it is a dog and

both inputs are trusted equally? This is probably the toughest case

indeed, but far from impossible to solve. Modern CA systems are

operating with memory, they have maps and registers of what they have

seen. They keep track of recorded objects from image to image.

If two seconds ago both sensors (or more precisely, the algorithms that

interprets the sensor readings) agreed the object to be a lorry and now

one of them thinks it’s a dog, it will still be considered a lorry

until there is stronger evidence available. Please keep this example in

mind when we return to the Uber case later.

A

little recap. So far we have covered that an AI must process the input

from many different sensors, it must evaluate both the quality of sensor

input and the quality of constructed understanding of the scene.

Sometimes different sensors give different prediction and not all

sensors are usable at any given time. The system also has a memory which

affects the process, just like for humans. It must then fuse this information to form a single understanding about the situation and use this to drive the car.

What could go wrong, can we trust such AI system? The quality of the system is a combination of its architecture

(which sensors are used, how sensor information is processed, how the

information is fused, which algorithms are used, how decisions are

evaluated etc) and the nature and amount of data that is used to train it.

Even with perfect architecture, many things could go wrong if we have

too few data. It is like sending unexperienced worker to execute a

difficult task. More data means more chances to learn and leads to

better decisions. Unlike humans, an AI can pool hundreds of years worth of experience and eventually master the driving better than any human could do.

So

when might such system still kill a pedestrian? If you scroll up this

article you’ll notice that there are many possible situations where

wrong assessment of the situation could lead to malfunction, but there

are some cases where the decision might go wrongly more apparently.

- Firstly, if the system has not seen enough similar data it might not be able to understand the situation correctly.

- Secondly, if the environment is difficult to sense and sensor inputs have low confidence or they send mixed signals.

- Thirdly, if the understanding of sensor input contradicts the understanding based on system memory (e.g., the object has been considered a lorry during the previous time steps, but now one sensor thinks it is a dog)

- And finally we can not exclude the possibility of malfunction.

I would say that any reasonably designed system is mostly able to handle any of those problems separately, but:

- resolving the controversy takes time;

- co-occurrence of several contributing factors might lead to wrong decisions and behaviour.

Before looking those situations more closely, let’s briefly cover what modern sensors are capable of and what they are not.

Making sense of sensors

Many

claim that technology is so advanced that Uber car should have

unambiguously identified the pedestrian crossing the road while pushing a

cycle at the wrong place while stepping out from the darkness into the

illuminated area. Which sensors could and could not measure that? And I

mean just measure, not understand what they measure.

- Camera can not see in the dark. Camera is a passive sensor which only works by registering illumination from the environment. I listed this first, as there are so many opinions about how good today’s cameras are and how they see in the dark (e.g., HDR camera). In fact, they might see under poor lighting conditions, but there must be some light. There are also infrared or infrared assisted cameras that will illuminate the environment, but these are superseded by LIDAR and hence mostly not used on autonomous vehicles. Normal camera will have hard time to sense something in the darkness behind a bright illuminated area.

- Radar can easily detect moving objects. It uses radio waves and measures how they reflect back from the object. Reflected waves from the moving target have noticeable difference in the wavelength, caused by Doppler shift. It is difficult to use regular radar for measuring small, slowly moving or standstill objects as there is only a small difference between waves reflected back from the still object and waves reflected back from the ground.

- LIDAR works similar to radar, but emits laser light and can easily map any surface in three dimensions. For wide range 3D imaging most LIDARs are rotating and scanning the environment a little like a copy machine is scanning a paper. As LIDAR emits light it does not depend on external illumination and hence can see in the dark. While expensive LIDARs have incredibly high resolution this also requires incredibly powerful computer to reconstruct the image in 3D. So when you see people claiming that LIDAR can work in 10 Hz (meaning 10 3D scans per second) then ask them if they can also process that data in 10 Hz. While almost every autonomous navigation system on the market uses LIDAR, Elon Musk thinks LIDAR is only good in short term and Tesla is not using it.

- Infrared is a smaller (but older) brother of LIDAR. Thermal infrared can distinguish object by temperature, and hence is very sensitive to… temperature. So if the sun heats up the road you might not be able to tell it apart from other objects of the same temperature. This is why it has limited use in autonomous navigation.

- Ultrasonic sensors are very good for collision avoidance at low speeds. They are used in most parking sensors on the market, but they have a small range. So this is why your car’s “city collision avoidance” system that also uses ultrasonic sensors will not work on the highway — it would only be able react when it is too late. Collision avoidance systems that work on the highway are mostly based on radar, like Tesla’s.

What might go wrong with this system?

- For starters, lets take a look at this picture. What do you notice?

Did

you notice the cyclist? He is obviously not trying to rush between the

cars but will stop and wait until the cars have passed and then crosses

the road:

So the first explanation might be that the algorithm has seen many cyclists waiting on the road for the car to pass.

If an AI would halt for each such cyclist we would consider it a very

poor AI indeed. So can it be that it made a decision not to stop as it

had never seen a cyclist stepping in front of car, but had seen many

waiting the car to pass? This explanation assumes that everything was

working fine, just that there was not enough data to tell the AI that a

cyclist might sometimes step in front of the vehicle. Although Waymo

(then Google car) claimed 2 years ago that they detect cyclists and even their gestures, cyclist detection (and prediction) remains an open research question.

2. Lack of understanding. Take a look at this picture:

Assume

our system is able to tell apart from cyclist that gives way and the

one that does not. The cyclist involved in Uber accident was at the

blind spot. It means that only LIDAR might have been able to detect it

on time to stop the car (i.e., detect before she stepped into

illuminated area). Perhaps it did detect, but cyclist detection from 3D point cloud is much harder task than cyclist detection from an image.

Did it understand it to be cyclist or any kind of object that moves

towards the car trajectory? Perhaps it did not and continued to operate

because objects on the other lane are normal. Similarly, if I was a

pedestrian on this image and did not fully understand what kind of

vehicle has just passed (as on the photo), I could still continue my

journey. Of course, if the vehicle halted it’s trajectory and decided to

drive towards me, I’d be in trouble. But in 99.99% of cases this is not

a reasonable assumption to make.

What happened after illumination?

There are many more reasonable and plausible explanations why the system was not well prepared to avoid the collision. But why didn't it brake when the cyclist was illuminated?

There is no easy answer for this. For certain, deterministic system

would have applied the brakes (though probably not avoid the collision).

If I was testing my car on the street, I’d now probably back it up with

such deterministic systems that run independently from AI. But let’s

take a look at AI.

As I said earlier, all modern

autopilots have memory and they trust their sensors to different extent

depending on the environment. Darkness is a challenging condition

as there is too little illumination to operate camera in real time with

full confidence. Hence it is highly likely, and in 99.99% of cases very

reasonable, that LIDAR input is more trusted at night. As we said

earlier the sensing output of a LIDAR is 10 times per second, but the

processing output really depends on the system, but is probably much

less. You can probably expect 1 scan per second on a decent laptop (this

estimation includes converting raw input to 3D image, locating the

objects from the image and understanding what they are). Using special

hardware will speed up the computation, but probably not up to 10Hz, at

least not on fine-grain resolution.

Let’s

now assume that the AI knows that there is an object in the dark and

trusts LIDAR data more than any other sensor at the time. When the

cyclist steps in front of the car, camera picks it up, perhaps other

sensors too. A deterministic system would hit the brakes as soon as the

signal is interpreted. But for AI this information might look like this:

An Unidentified Flying Object? Or perhaps atmospheric halo?

Lets compare it with autonomous driving AI. (1) On one hand the system

has a trusted sensor, a LIDAR, that tells the car there is nothing in

front of it (because of slow processing it has not seen the movement

yet). (2) Then it probably has a history of sensor measurements where

nothing indicates that any object was going to collide with the car. (3)

And finally, some sensors are telling that there is an obstacle in

front of the car and (4) perhaps algorithms are even able to classify

it. AI now needs to decide.

We must consider that the system is still probabilistic which means that each sensor has some error rate, the measurements are not 100% exact

and each prediction from the sensor data also has an associated error.

What might go wrong? If camera and LIDAR do not agree (or have not yet

been able to match the images) then the system lacks precise 3D data for

camera images and has to reconstruct it only from the images. Lets look

at the disturbing image of the cyclist in Uber accident:

Without 3D information the system can only rely on machine learning models to detect the object — and as a consequence does not know the distance to the object. How can anything not recognize the cyclist? Here are a few (annoying) thoughts.

- The person wears a black jacket which blends with the environment (see the yellow-dotted area). Many critics will claim that modern cameras are good enough to separate the jacket from the night. Yes indeed, but most machine learning algorithms are not able to train a model using 3–20 megapixel images. For real-time operation, the resolution is kept as low as feasible, 1000 x 1000 pixel resolution is probably overestimation, although some authors report being able to process 2M x 1M images, but still fail to detect a bicycle at night. Mostly, smaller resolution images are used or pixel values are averaged over some area, forming superpixels. So effectively, there is only a partially visible object.

- Without proper 3D information (which provides distance), fragments of the bicycle might be misclassified — see green and pink annotations on the image. When we (humans) look at the whole image, we immediately notice the person. When we look at the frame of the bicycle (annotated with pink dots), it resembles closely the taillights of a car (also annotated with pink). Returning to the discussion of probabilities — would the algorithm expect to see taillights or bicycle frame on the road at night in front of the car? It probably might have seen many taillights of different shape and much fewer number of bicycles and hence is biased towards classifying the object as a taillight.

- Lastly I have annotated a spot under the street lamp with orange color. You can find the equivalent color and shape from the picture.

So

if the error rate of camera was high enough, the autonomous system

might not have trusted it’s input. Perhaps a system might have predicted

a pink area to be a bicycle with 70% confidence and taillights with 77%

confidence? Not an unreasonable result given the circumstances.

I could continue with the listing, but I guess I have made my point: building

an AI that can autonomously drive is a really hard and challenging task

which is prone to errors due to complexity and available training data.

Also, implementing some of this functionality using deterministic algorithms is many orders of magnitude easier.

And it does seem a good idea to equip early self-driving cars with some

deterministic back-up systems to avoid collision. On the other hand, it

is 100% certain, that no-one can build a self-driving car using

deterministic approach.

Finally,

I’ll repeat again that I have no idea what caused the accident, perhaps

it was not related to any of the stuff I described here. But at least

when you see very critical comments in social media which claim that the

technology to prevent the accident existed 1–2–5–10–20 years ago, then

it is possible to acknowledge that this technology has little to do with

fully autonomous driving and it is far from being intelligent. Although

I admit it is hard to accept the fact that a technology exists but the

AI is not yet able to utilize it fully. That said, there is extensive research done on the subject, recent study identified more than 900 hazards that an AI must be able to account for.

So, could AI have saved the cyclist had I programmed the Uber car?

The answer is that no-one knows. The internals of modern AI system are far too complex to assess without having exactly the same data

as was available for the Uber car. In essence, my AI system, or yours,

or Waymo’s, or Tesla’s, would have made a probabilistic decision

too — otherwise it most certainly was not a fully autonomous system. And

perhaps, at least to some extent, making mistakes and learning from

them is inevitable for every intelligent system. That said, we are all

working hard to make autonomous driving safer.

Also, lets back up our AI’s with old school collision avoidance! Intelligence is not the same as perfection, at least for now.

No comments:

Post a Comment